Your Sitecore Website & SEO

TLDR: Take me to the Website SEO Readiness Checklist

This blog is for you if you’re a Sitecore content author, marketing manager, or coordinator who needs a little guidance on setting your site up for SEO success.

Setting your website up for SEO success is commonly overlooked when launching a Sitecore website.

SEO is usually one of the items added to a phase 3 or 4 checklist for a site launch, or thrown in the ‘nice to have’ bucket rather than the priority bucket.

As much as we’d like to believe we’ll get to phase 4 eventually, the truth is that you probably won’t, and SEO is far too important to leave that long if you want to get ahead.

You’re likely redeveloping your site as an investment, because you expect a newly designed and developed site to acquire more leads or convert more sales.

What you’re probably failing to realize is that you need to attract customers and leads to your site in the first place, and there’s no better way to do that than with SEO.

Before I get into how to ensure your Sitecore environment is set up for SEO success, let’s take a look at why you should even care about SEO.

Why Care About SEO?

Reason #1

People trust Google search results.

Think about the first thing you do in your own buying journey once you realize you have a problem and need a solution: it’s usually turning to trusty ’ol Google.

You’ll likely do a Google search at every stage of your journey, from figuring out what the solution to your problem is, to choosing a company, product, or service to provide it.

Since many people are skeptical of ads today, they tend to stick to organic search, and according to Moz, a staggering 90% of clicks come from the first page of results. That’s a pretty good reason not to ignore SEO.

Reason #2

SEO gives you an edge against your competition.

SEO can be a time-consuming practice for many businesses.

It needs constant attention since Google updates its algorithm multiple times a day.

New pieces of thought leadership and tactics are uncovered daily, so if you’re not staying on top of the latest trends, you won’t be able to keep your position.

It’s for this reason that many businesses choose to focus on media buying and advertising rather than SEO. That gives you another channel for getting ahead of your competition, one they’re most likely ignoring.

The ones that aren’t ignoring SEO? You can bet your bottom dollar they’re acquiring more leads and making more sales.

Since most consumers start their buying journey with Google, optimized websites typically attract more traffic and make more sales.

Reason #3

SEO improves your website’s user experience (UX).

Google rewards websites that have a great user experience.

In fact, that’s pretty much what SEO is all about. Google looks for the best webpages to show for each search because it wants to give its users the best possible experience.

Most of the technical and on-page SEO elements that Google analyzes to decide which websites and webpages rank highly are based on factors that also affect user experience.

So optimizing your website for SEO will also improve your site’s user experience.

Now that you understand the importance of SEO, let’s explore how you can make your new site SEO friendly right from the get-go.

What We'll Cover

- Setting Your Website Up On Google Search Console

- XML Sitemaps

- Robots.txt Files

- Website UX & Site Speed

- 404 Errors

- Meta Descriptions

- Title Tags

- H1 Tags

- Canonicalization

- Structured Markup (Schema)

- URL Structure

- Website Distribution

- Blog Content

- Bonus Item

Set Your Site Up To Perform For SEO

Set Your Website Up On Google Search Console

Google Search Console is the best free SEO tool to use if you want to get serious about SEO.

It provides reports to help you monitor your website’s performance on Google, along with suggestions for fixing issues and errors that may be hurting that performance.

You can also use Google Search Console to see which search terms visitors use to find your website, and which of your URLs they visit most from Google.

Use this handy little guide by Google to set your website up on Search Console.

XML Sitemap

Sometimes your website can be hard for Google to crawl, particularly if you don’t have a lot of internal links pointing to your pages.

XML sitemaps help search engines discover the important pages on your website faster by providing Google with a roadmap of all the URLs on your site.

This allows Google to easily understand your website’s structure, as well as find and crawl the pages on your site.

If you use SXA, your sitemap is generated for you and stored in cache. You can follow the steps in this Sitecore documentation article to extract your website’s sitemap.

Sitecore’s marketplace also has several extensions for extracting XML sitemaps. Unfortunately, there aren't many options that work for versions 9.0 and later.

In that case, you can ask your developer to build a solution that runs a controller action on a schedule and updates your sitemap once a day.

Another option is to use one of the many sitemap extractor tools out there; however, most of them require a payment or subscription fee.
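If your developer goes the scheduled-job route, the job's output is a standard XML sitemap in the format defined at sitemaps.org. Here's a minimal sketch of that output step, written in Python purely for illustration (a Sitecore solution would normally do this in C#). The `build_sitemap` function and the example URLs are hypothetical; a real job would pull the list of published pages and their last-updated dates from Sitecore.

```python
import xml.etree.ElementTree as ET
from datetime import date

# Namespace required by the sitemaps.org protocol
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified) pairs.

    `pages` is a hypothetical input; a real job would query Sitecore
    for published pages instead of taking a hard-coded list.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # ElementTree escapes & and <
        ET.SubElement(entry, "lastmod").text = last_modified.isoformat()
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Example run with two hypothetical pages
sitemap_xml = build_sitemap([
    ("https://www.example.com/", date(2021, 1, 15)),
    ("https://www.example.com/blog", date(2021, 2, 1)),
])
print(sitemap_xml)
```

The `<lastmod>` date is optional in the protocol, but keeping it accurate can help crawlers spot recently updated pages.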

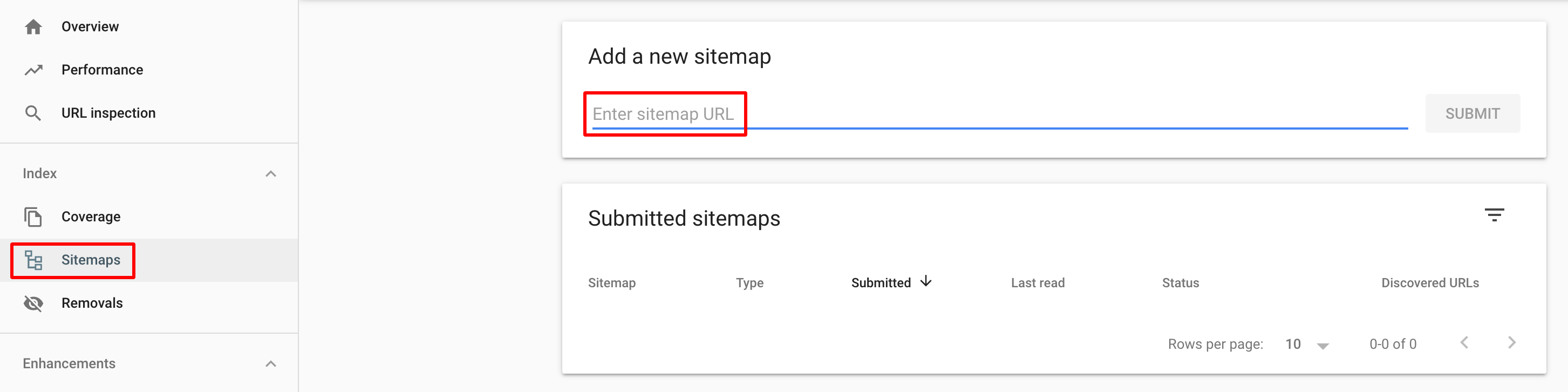

Once you’ve extracted your sitemap, submit it to Google Search Console by clicking on Sitemaps in the left side navigation under Indexing, and submitting the sitemap URL where prompted.

Robots.txt Files

The robots.txt file is part of the “robots exclusion protocol” (REP), a group of web standards that regulate how robots crawl your websites.

Robots.txt files let the spiders (web-crawling software) know which pages they can and cannot crawl. These crawl instructions are specified by “disallowing” or “allowing” the behaviour of certain (or all) spiders.

You may want to exclude pages where two versions of the same page exist. By disallowing one version, your pages won’t have to compete against each other in search rankings.

Ask your developers to add a robots.txt file to your site and add exclusions for any pages you do not want crawled.
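A robots.txt file is just a plain-text list of rules, so it's worth sanity-checking the rules before they go live. One way to do that is Python's standard `urllib.robotparser` module, which interprets a file the way a well-behaved crawler would. The paths below (`/search`, `/print/`) are hypothetical examples, not Sitecore defaults.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block internal search results and
# printer-friendly duplicate pages for all crawlers ("*")
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /print/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Check a few URLs the way Googlebot would see them
for url in [
    "https://www.example.com/products/widget",
    "https://www.example.com/print/products/widget",
    "https://www.example.com/search?q=widget",
]:
    status = "allowed" if parser.can_fetch("Googlebot", url) else "blocked"
    print(f"{status}: {url}")
```

Note that `urllib.robotparser` only does simple prefix matching and doesn't understand the `*` and `$` path wildcards that Google supports, so keep rules simple when testing with it.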

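Non-production pages and URLs (for example, on a staging or test server) can also be picked up by Google and flagged with 5xx errors in Google Search Console. Disallowing them in the robots.txt file served on those hosts stops well-behaved crawlers from requesting them. Keep in mind that robots.txt controls crawling rather than indexing, and rel="nofollow" is a link attribute, not a robots.txt rule. To keep a page out of search results entirely, add a noindex robots meta tag to a page that is not blocked in robots.txt (otherwise Google can't see the tag), or put the environment behind a login.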

Side note: Google Search Console (GSC) flags pages with warnings or errors depending on the severity of the issues Google has detected on the page.

Warnings won't immediately affect your position in search results, but errors can. I’ve seen pages whose errors were ignored get removed from Google completely, so take errors seriously and resolve them as soon as you can.

Website UX & Site Speed

Google has announced that a 2021 update will introduce a new ranking signal called “Page Experience”, which combines existing user experience signals with a new set of metrics called Core Web Vitals when ranking pages.

The ‘Core Web Vitals’ report in Google Search Console (under Enhancements in the left side navigation) can provide insights into how your website will be affected by the update.

The main factors that are included in the Core Web Vitals are:

- Largest Contentful Paint (LCP) – Measures loading performance. For a good user experience, aim for an LCP of 2.5 seconds or less.

- First Input Delay (FID) – Measures interactivity. For a good user experience, aim for an FID of 100 milliseconds or less.

- Cumulative Layout Shift (CLS) – Measures visual stability. For a good user experience, aim for a CLS score of 0.1 or less.
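To make these thresholds concrete, here's a small Python sketch that grades a page's measurements. The "good" limits match the list above; the "poor" limits (LCP over 4 seconds, FID over 300 milliseconds, CLS over 0.25) are the other bands Google publishes. The page measurements are made-up example numbers; real field data comes from the Core Web Vitals report in Search Console.

```python
# Google's Core Web Vitals bands: (upper bound for "good", upper bound
# for "needs improvement"). LCP is in seconds, FID in milliseconds,
# and CLS is a unitless score.
THRESHOLDS = {
    "LCP": (2.5, 4.0),
    "FID": (100, 300),
    "CLS": (0.1, 0.25),
}


def grade(metric, value):
    """Return 'good', 'needs improvement' or 'poor' for one measurement."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


# Hypothetical measurements for one page
page = {"LCP": 3.1, "FID": 40, "CLS": 0.02}
for metric, value in page.items():
    print(f"{metric} = {value}: {grade(metric, value)}")
```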

Slow-rendering sites can drive users away. Quick load times can improve traffic volume and increase conversion rates, so aim for a page load time of less than 3 seconds.

Consider lazy-loading offscreen and hidden images so they load only after critical resources have finished, which lowers Time to Interactive. Modern browsers support native lazy loading through the `loading="lazy"` attribute on images and iframes, for example `<img src="myimage.jpg" loading="lazy" alt="...">` and `<iframe src="content.html" loading="lazy"></iframe>`. For whole sections of a page, the CSS property `content-visibility: auto` lets the browser skip rendering them until they're needed.

It’s important to note that these features aren’t supported in every browser and browser version. To check which browsers and versions support them, put them through this handy tool.

To improve site speed and page experience:

- Configure a CDN, such as Cloudflare, to properly cache all media and CSS

- Implement ‘read more’ buttons on pages

- Rethink how large sections of the HTML are generated

- Hide sections of the page from the browser until the first load finishes

- Improve slow server response times

- Reduce resource load times (e.g. images, videos) by keeping files small (you can use tinypng.com to compress image file sizes)

404 Errors

404 is the status code for a “page not found” error. Visitors usually run into one by following a broken link: a link to a page that has been deleted, moved, or was never published.

The best way to prevent 404 errors is to use Sitecore’s internal linking feature to link to other items whenever possible.

Sitecore will then display a warning if you try to delete an item that links to other items, or an item that other items link to.

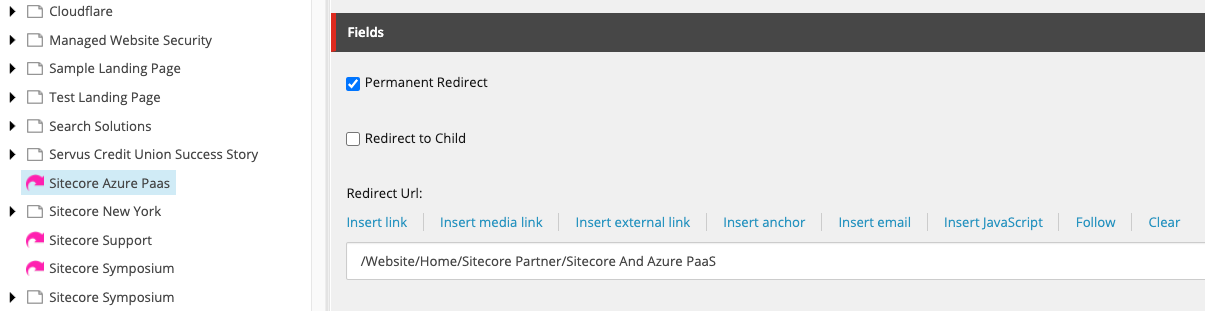

Another way to prevent 404 errors is to set up 301 (permanent) redirects instead of simply taking a page down or deleting it.

You'll need to ask your developer to build the ability for you to add 301 redirects, and there are two main ways to do it.

You can add a 301 redirect item in the content tree of the Content Editor, as shown in the screenshots below. With this approach, you give the redirect item the same name as the page you want to redirect, and then specify the URL to redirect to on the item:

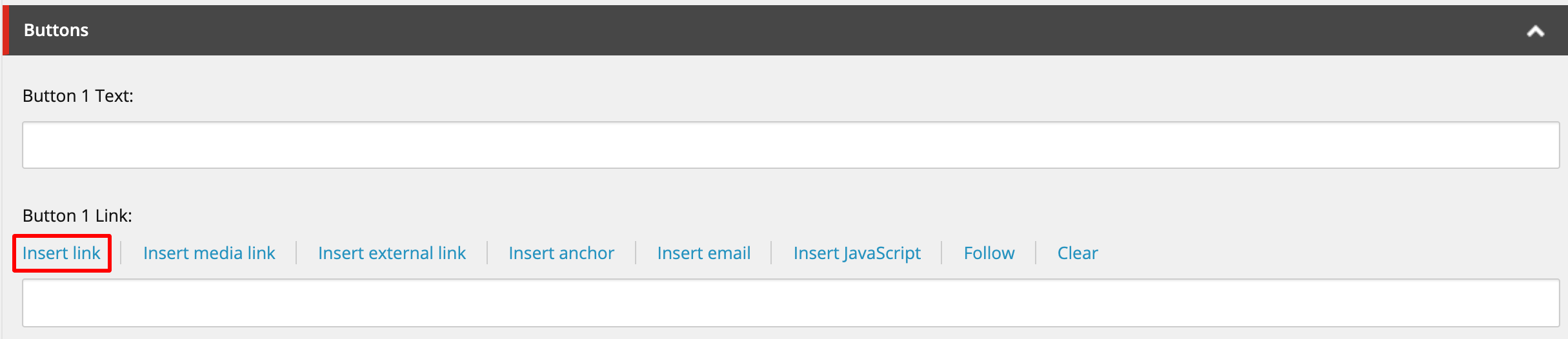

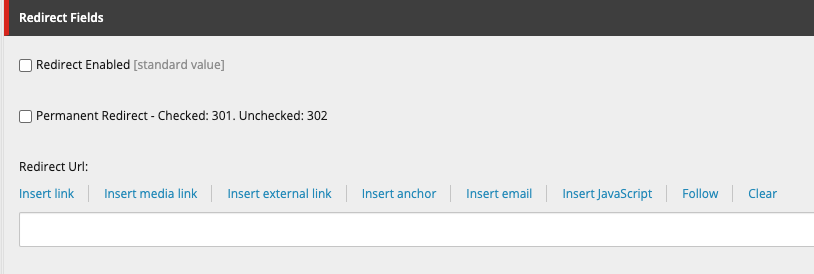

The other option is to ask your developer to create a field on your pages that lets you add a redirect URL directly on the page.
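Whichever option you choose, the behaviour underneath is the same: look up the requested path, and if the page has been retired, answer with a 301 status pointing at the new URL. The Python sketch below illustrates that logic with a hypothetical `REDIRECTS` map standing in for the redirect items or fields your developer builds; `resolve` and `normalize` are illustrative helpers, not Sitecore APIs.

```python
from http import HTTPStatus

# Hypothetical redirect map (old path -> new URL). In Sitecore these values
# would come from redirect items or a redirect field on each page.
REDIRECTS = {
    "/old-services": "/services",
    "/about-us/team": "/about/our-team",
}


def normalize(path):
    """Lowercase and drop any trailing slash so '/Old-Services/' matches."""
    return path.lower().rstrip("/") or "/"


def resolve(path, live_pages, redirects=REDIRECTS):
    """Return (status, location) for a requested path."""
    key = normalize(path)
    if key in live_pages:
        return HTTPStatus.OK, None
    if key in redirects:
        # 301 tells browsers and search engines the move is permanent
        return HTTPStatus.MOVED_PERMANENTLY, redirects[key]
    return HTTPStatus.NOT_FOUND, None


live_pages = {"/", "/services", "/about/our-team"}
status, location = resolve("/Old-Services/", live_pages)
print(int(status), location)  # 301 /services
```

Checking live pages before redirects means a redirect can never hide a page that still exists, and anything that matches neither falls through to a 404.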

Meta Descriptions

A meta description tag is a short summary of a webpage's content that helps search engines understand what the page is about, and search engines can display it as your page's description in search results.

A good description helps users know what your page is about and encourages them to click on it.

If your page's meta description tag is missing, search engines will usually generate a snippet from the page's content (often its first sentence), which may be irrelevant and unappealing to users.

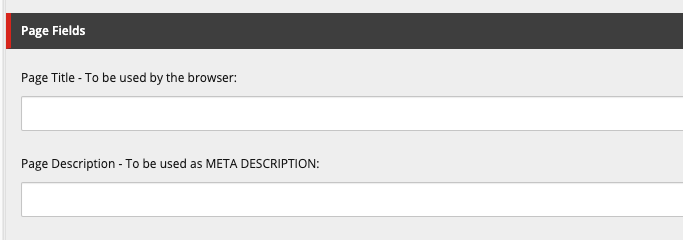

Ask your developer to create a field that lets you add a meta description to a page.

It's best to group the page title and meta description together, as they appear in the screenshot above.

While missing meta descriptions are bad for SEO, duplicate meta descriptions are worse. They make it difficult for search engines and users to differentiate between different webpages.

Follow these best practices for meta descriptions:

- Provide a unique, relevant meta description for each of your webpages

- To improve click-through rates, make sure every page's meta description contains relevant keywords

- Keep each description to around 156 characters so it fits in the search engine results page (SERP) snippet without being cut off
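If you want to audit descriptions in bulk (for example, from a content export), these checks are simple to automate. This Python sketch assumes a hypothetical mapping of page URLs to description text, and treats 156 characters as this article's guideline rather than a hard limit enforced by Google.

```python
from collections import Counter

MAX_DESCRIPTION_LENGTH = 156  # guideline from this article, not a hard limit


def audit_descriptions(pages):
    """Return (url, problem) pairs for missing, long or duplicate descriptions.

    `pages` maps each URL to its meta description ("" if missing).
    """
    # Count normalized descriptions so duplicates can be flagged
    counts = Counter(d.strip().lower() for d in pages.values() if d.strip())
    problems = []
    for url, description in pages.items():
        text = description.strip()
        if not text:
            problems.append((url, "missing"))
            continue
        if len(text) > MAX_DESCRIPTION_LENGTH:
            problems.append((url, f"too long ({len(text)} characters)"))
        if counts[text.lower()] > 1:
            problems.append((url, "duplicate"))
    return problems


# Hypothetical pages: two share a description, one has none
pages = {
    "/": "Sitecore consulting and development for marketing teams.",
    "/services": "Sitecore consulting and development for marketing teams.",
    "/blog": "",
}
for url, problem in audit_descriptions(pages):
    print(url, problem)
```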

Title Tags

Duplicate title tags make it difficult for search engines to determine which pages are relevant for a specific search query, and which ones should be prioritized in search results.

Pages with duplicate titles have a lower chance of ranking well.

Most search engines truncate titles containing more than 70 characters. Incomplete and shortened titles look unappealing to users and won't entice them to click through to your page.

Generally, using short titles on web pages is a recommended practice. However, keep in mind that titles containing 10 characters or less don't provide enough information about your page, and they limit its potential to show up in search results for different keywords.

Follow these best practices for title tags: <ul><li>Ensure that every page on your website has a unique, concise title containing your most important keywords</li> <li>Ensure that the content of your title and h1 tags is different (no exact matches)</li> <li>Rewrite page titles to be 70 characters or less</li> <li>Add descriptive text inside your page's title tag</li></ul>
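The same rules can be checked automatically, one page at a time. This Python sketch applies the thresholds used in this section (more than 10 and at most 70 characters, not identical to the h1); `check_title` is a hypothetical helper, and character counts are only a proxy, since search engines actually truncate titles by display width.

```python
def check_title(title, h1):
    """Apply this section's title tag guidelines to one page.

    Returns a list of problems; an empty list means the title passes.
    """
    text = title.strip()
    if not text:
        return ["missing"]
    problems = []
    if len(text) <= 10:
        problems.append("too short (10 characters or less)")
    elif len(text) > 70:
        problems.append(f"too long ({len(text)} characters)")
    # Exact matches with the h1 waste a chance to target other keywords
    if text.lower() == h1.strip().lower():
        problems.append("identical to the h1")
    return problems


print(check_title("Services", "Services"))
print(check_title("Sitecore Development Services | Example Co", "Sitecore Development"))
```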

<hr>

<h3 class="blue">H1 Tags</h3>

Having a relevant <h1> tag on your website’s pages helps your website rank better in search results.

H1 tags are important for SEO because they tell both search engines and website visitors what the content of pages will be about.

Follow these best practices for using h1 tags: <ul><li>Don't duplicate your title tag content in your first-level heading. If your page's title and h1 tags match exactly, the h1 may appear over-optimized to search engines. Using the exact same content in both is also a lost opportunity to incorporate other relevant keywords, so ensure your title and h1 tags have different content.</li> <li>While less important than title tags, h1 headings still help define your page’s topic for search engines and users. If an h1 tag is empty or missing, search engines may rank your page lower than they otherwise would. A missing h1 tag also breaks your page’s heading hierarchy, which isn't SEO friendly.</li> <li>Although HTML5 allows multiple h1 tags, for SEO it's best to stick to one h1 tag per page.</li></ul>
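To check a page's h1 tags against these rules, you can count them in the page's HTML. This sketch uses Python's built-in `html.parser` module; the `H1Collector` class, `check_h1` function, and sample markup are hypothetical, and it only inspects the HTML you pass in (headings added later by JavaScript aren't seen).

```python
from html.parser import HTMLParser


class H1Collector(HTMLParser):
    """Collect the text of every <h1> element in a page."""

    def __init__(self):
        super().__init__()
        self.headings = []
        self._in_h1 = False

    def handle_starttag(self, tag, attrs):
        if tag == "h1":  # tag names arrive lowercased
            self._in_h1 = True
            self.headings.append("")

    def handle_endtag(self, tag):
        if tag == "h1":
            self._in_h1 = False

    def handle_data(self, data):
        if self._in_h1:  # includes text inside nested tags like <span>
            self.headings[-1] += data


def check_h1(page_html, title):
    """Return problems with a page's h1 tags (empty list if none)."""
    collector = H1Collector()
    collector.feed(page_html)
    collector.close()
    headings = [h.strip() for h in collector.headings]
    problems = []
    if not any(headings):
        problems.append("missing or empty h1")
    if len(headings) > 1:
        problems.append(f"{len(headings)} h1 tags (use one)")
    if title.strip().lower() in (h.lower() for h in headings):
        problems.append("h1 identical to the title")
    return problems


page = ("<html><head><title>Sitecore SEO Guide</title></head><body>"
        "<h1>Set Up Sitecore for SEO</h1><h1>Checklist</h1></body></html>")
print(check_h1(page, "Sitecore SEO Guide"))
```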

<hr>

<h3 class="blue">Canonicalization</h3>

Some SEO audit tools consider webpages duplicates if their content is at least 85% identical, and duplicate content can significantly affect your SEO performance.

'Identical pages' doesn't only refer to separate pages that have the same content. If a page is accessible by multiple URLs, Google still considers this to be duplicate content.

Confused yet? Let's look at some examples of common instances where you may have duplicate URLs being produced for each page:

https://www.example.com/example page

https://www.example.com/Example Page

https://www.example.com/example-page

https://www.example.com/example%20page

http://www.example.com/example-page

https://example.com/example-page?utm....

If you have a web page accessible by multiple URLs, or different pages with similar content (e.g. separate mobile and desktop versions, or http and https versions), you should specify which URL is authoritative (canonical) for that page.

To tell Google which URL is the master copy of the page, add a tag called a “rel canonical tag” to every page on your site.

Here’s an example of what the tag looks like:

`<link rel="canonical" href="https://www.example.com/example-page" />`

Add this tag to every page, with the href attribute set to the correct URL for that page.

If you're serious about SEO, every page on your site should have a canonical tag. And yes, most of those tags will be self-referencing.
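To see how the example URL variants above collapse into one canonical address, here's a hedged Python sketch. It assumes a hypothetical policy where the preferred version uses `https://www.example.com`, a lowercase, hyphenated path, and no query string; `canonical_url` and `canonical_tag` are illustrative helpers, not Sitecore APIs.

```python
from urllib.parse import unquote, urlsplit, urlunsplit


def canonical_url(url, host="www.example.com"):
    """Fold common URL variants into one canonical URL (hypothetical policy)."""
    parts = urlsplit(url)
    # Decode %20 and friends, then lowercase and swap spaces for hyphens
    path = unquote(parts.path).lower().replace(" ", "-")
    # Force https and the preferred host; drop the query string and fragment
    return urlunsplit(("https", host, path, "", ""))


def canonical_tag(url):
    return f'<link rel="canonical" href="{canonical_url(url)}" />'


variants = [
    "https://www.example.com/example page",
    "https://www.example.com/Example Page",
    "https://www.example.com/example-page",
    "https://www.example.com/example%20page",
    "http://www.example.com/example-page",
    "https://example.com/example-page?utm_source=newsletter",
]
for url in variants:
    print(canonical_tag(url))
```

Google treats the canonical tag as a strong hint rather than a directive, so it works best alongside 301 redirects for the variants you control.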

Ask your developer to add a field into your Sitecore instance like the one in this example here: <img src="https://edge.sitecorecloud.io/fishtankconc21d-getfishtanknext-prod-f347/media/getfishtank/blog/why-your-sitecore-site-needs-canonical-tags/canonical-tag-field.png" alt="canonical tag field" width="75%" height="75%">

<p>You can then add in the correct or ‘canonical’ URL for every page.</p>

For a more detailed explanation of canonicalization, read our <a href="https://getfishtank.ca/blog/why-your-sitecore-site-needs-canonical-tags" target="_blank">'why your Sitecore site needs canonical tags' blog</a>.

<hr>

<h3 class="blue">Structured Markup (Schema)</h3> There's a limit to how much webpage content search engines can understand. You can help Google read your content by using structured markup. Structured markup (or schema) is a snippet of structured data, usually written in JSON-LD, that describes the page content, such as its title and author, along with other details about the content.

As content authors, we usually don't have direct access to edit the source code of our websites. Ask your developer to build a field on your pages, where you can add and update the structured data. You can even ask them to add a template for the structured data as a default, so all you have to do is go through and fill it in with relevant details of the content.

Use structured markup on pages for articles, events, and products. A full listing of schema types can be found <a href="https://schema.org/docs/schemas.html" target="_blank">here</a> and helpful tips for the correct syntax can be found <a href="https://developers.google.com/search/docs/data-types/article">here</a>.
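For reference, here's roughly what a filled-in Article template looks like once it's rendered on a page. The Python sketch below builds the JSON-LD snippet from a few fields; the `article_jsonld` helper and the sample values are hypothetical, and Google's article documentation lists which properties it recommends.

```python
import json


def article_jsonld(headline, author, date_published, image_url):
    """Build a minimal schema.org Article snippet (illustrative fields only)."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601, e.g. "2021-03-01"
        "image": [image_url],
    }
    # Escape "</" so text like "</script>" can't close the tag early;
    # "\/" is a valid JSON escape for "/"
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + body + "\n</script>"


snippet = article_jsonld(
    headline="Your Sitecore Website & SEO",
    author="Jane Doe",
    date_published="2021-03-01",
    image_url="https://www.example.com/media/seo-checklist.png",
)
print(snippet)
```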

<hr>

<h3 class="blue">URL Structure</h3> For URLs to be SEO friendly, they should clearly describe the page and contain no spaces, underscores, or other special characters. Avoid parameters where possible, as they make URLs less inviting for users to click or share.

When a URL contains a space or another special character, it gets percent-encoded (a space becomes %20). This clutters URLs and makes them less visually appealing, which can lead to a lower click-through rate (CTR).

Build links that contain hyphens rather than underscores or spaces, and ask your developer to redirect all URLs with incorrect spacing or special characters to the hyphenated version of the URL, rather than falling back on the %20 encoding.
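Here's a minimal Python sketch of the redirect rule described above. It assumes a hypothetical policy of lowercase, hyphenated paths; `slugify` and `hyphenated_redirect` are illustrative helpers, not part of Sitecore, and the returned path is what your developer would send back in a 301 response.

```python
import re
from urllib.parse import unquote


def slugify(segment):
    """Turn one path segment into a lowercase, hyphenated slug."""
    text = unquote(segment).lower()  # decode %20 and friends first
    # Collapse every run of non-alphanumeric characters into a single hyphen
    text = re.sub(r"[^a-z0-9]+", "-", text)
    return text.strip("-")


def hyphenated_redirect(path):
    """Return the clean path to 301-redirect to, or None if already clean."""
    clean = "/" + "/".join(slugify(s) for s in path.split("/") if s)
    return None if clean == path else clean


for path in ["/Our Services", "/our_services", "/our%20services", "/our-services"]:
    print(path, "->", hyphenated_redirect(path))
```

As written, this also lowercases paths, drops trailing slashes, and replaces accented letters with hyphens, so a site with non-English page names would need a transliteration step first.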

<hr>

<h3 class="blue">Website Distribution</h3> Website distribution (or crawl depth) shows how many clicks it takes to get to a page on your site from the homepage. Typically, better-performing pages are closer to the homepage, with deeper pages acting as supporting content.

Pages with a crawl depth of four or more clicks are less likely to be crawled by search engine robots or reached by users.

Evaluate the content structure of the site prior to go-live to ensure that important pages are not buried too deep for users to find. Move more significant pages higher in the site’s architecture.
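Crawl depth is the shortest click path from the homepage, which is exactly what a breadth-first search over your internal links computes. This Python sketch assumes a hypothetical `links` map of each page to the pages it links to (for example, exported from a site crawler); `crawl_depths` is an illustrative helper.

```python
from collections import deque


def crawl_depths(links, home="/"):
    """Breadth-first search from the homepage.

    `links` maps each page to the pages it links to. Returns the minimum
    number of clicks needed to reach each page from `home`.
    """
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # in BFS, the first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site structure
links = {
    "/": ["/services", "/blog"],
    "/services": ["/services/sitecore"],
    "/services/sitecore": ["/services/sitecore/migrations"],
    "/services/sitecore/migrations": ["/services/sitecore/migrations/case-study"],
    "/blog": [],
}
depths = crawl_depths(links)
deep = [page for page, depth in depths.items() if depth >= 4]
print(deep)
```

Pages that never show up in the result aren't linked from anywhere reachable from the homepage, which makes them even harder to find than deep pages.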

<hr>

<h3 class="blue">Blog Content</h3> Having a blog or insights area on your site where you can publish blogs and long-form content is really important for SEO. Blogs let you publish as much content on a topic as you like, which you can use to build 'content clusters' and rank for long-tail keywords.

Content clusters are supporting pieces of content (like blogs) that link back to a primary topic landing page, known as a 'pillar page'. The more content you write about a topic and link back to the pillar page, the more you signal to Google that your website is an authoritative source on that topic, which builds SEO credibility.

Blogs are also a great way to acquire traffic, so make sure you develop a blog or place to add long-form article content that you can easily add internal links to.

<hr>

<h3 class="blue">Wrap Up</h3> Phew - we made it! Thanks for getting this far. We've covered a lot today, so I hope you aren't feeling too overwhelmed. SEO is a big world once you start to delve deep, and you'll be doing yourself a big favour by setting your site up for success right from go-live.

If you're feeling overwhelmed by all this new information and have a few questions, please reach out to me on Twitter <a href="https://twitter.com/natmankowski" target="_blank">@natmankowski</a>; I'd be more than happy to help!

<hr>

<h3 id="tldr" class="blue">Bonus! Website SEO Readiness Checklist</h3>

<a href="/-/media/GetFishtank/Blog/How-To-Set-Your-Sitecore-Instance-Up-For-SEO-Success/Sitecore-SEO Readiness-Checklist.pdf">Download a printable version of the checklist here.</a>

<ol><li>Set my website up on Google Search Console</li> <li>Extract my sitemap and submit it to Google Search Console</li> <li>Add a robots.txt file and exclude any pages that I don't want Googlebot to crawl</li> <li>Keep my images small by compressing them on <a href="https://tinypng.com/" target="_blank">tinypng.com</a></li> <li>Set up image lazy loading</li> <li>Configure a CDN, such as <a href="https://www.cloudflare.com/" target="_blank">Cloudflare</a>, to properly cache all media and CSS</li> <li>Add a field or item for performing 301 redirects</li> <li>Ensure I use Sitecore's internal linking function when hyperlinking to other pages</li> <li>Add a field for page titles and meta descriptions</li> <li>Ensure every page has a unique meta description of 156 characters or less that contains relevant keywords</li> <li>Add unique page titles of 70 characters or less with relevant, descriptive keywords, and ensure they are different from my h1 tags</li> <li>Ensure every page has a unique h1 tag, and that I only use one h1 tag per page</li> <li>Set up a field for adding "rel canonical" tags to pages, and ensure it is filled in for every page</li> <li>Set up a field for adding schema to pages, and ensure the structured data is complete for all relevant pages</li> <li>Ask my developer to redirect all URLs with spaces or special characters to the hyphenated version of the URL, rather than falling back on %20 encoding</li> <li>Ensure significant pages aren't buried too deep in the URL or website structure, and move them higher in the site’s architecture</li> <li>Set up the ability to write and publish long-form content such as blogs or articles, and use it to target relevant long-tail keywords and build content clusters</li></ol>